In our most recent SEO office hours session we discussed the question uttered (often in panic) by stakeholders and SEOs alike:

Why Is My F@#k%*g SEO Tanking?!

We’ll recap the topics covered by LSG’s CEO, Andrew Shotland, and VP of SEO Strategy, Karl Kleinschmidt, including:

- How SEOs currently analyze a traffic drop without big data

- What automated tagging can do to simplify figuring out traffic drops

- Automated tagging: a step-by-step guide

- A case study of how automated tagging helped our client

For this post, we’ll dive straight into the automated tagging portion of the SEO office hours session. We also talked about 7 simple hacks for diagnosing traffic drops, which you can read about in our newsletter, or you can watch the full SEO office hours video below.

How SEOs Currently Analyze a Traffic Drop Without Big Data

If your traffic dropped suddenly, you need to find out what changed. Analyzing what changed on your site will likely require some big data manipulation, but before we dive into that, it helps to understand how SEOs currently analyze a traffic drop without big data.

Without big data, you are likely tracking a small set of keywords with third-party rank trackers and/or looking at small samples of your keywords in Google Search Console (GSC).

The problems with this:

- You have to go through the data manually to catch patterns, so you might miss something

- The cost of third-party rank tracking grows fast as you add large numbers of keywords

- Even with free tools like GSC, doing this manually can take a long, long time

What Automated Tagging Can Do to Simplify Figuring Out Traffic Drops

So, what’s the better way to do this? Automated tagging. By automatically tagging your data by category, intent, and page type, you can:

- Find patterns in traffic drops more easily

- Better understand ongoing traffic

- Retain knowledge from past analyses

- Make it easier to predict the impact of future SEO projects

The Approach with Automated Tagging

First, we tag all the keywords in GSC into 3 buckets: categories, intent, and page types.

For example, one page type could be product pages, so we tag any page that has “.com/p/” in the URL.

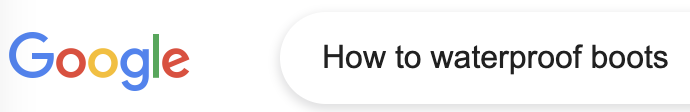

An example of intent would be any queries that contain question words like what, how, who, etc.

Lastly, a category might be shoes, so we’d tag all queries containing “shoe” or “shoes.”
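To make the three buckets concrete, here is a minimal Python sketch of rule-based tagging for a single GSC (query, page) row. The rule dictionaries, the `tag_row` helper, and the example URL are hypothetical; the patterns only encode the examples above (“.com/p/” for product pages, question words for intent, shoe/shoes for the category), and a real setup would load many more rules.

```python
import re

# Hypothetical rules, one dict per bucket: tag name -> regex.
PAGE_TYPE_RULES = {"product": re.compile(r"\.com/p/")}
INTENT_RULES = {"question": re.compile(r"\b(what|how|who|why|when|where)\b", re.IGNORECASE)}
CATEGORY_RULES = {"shoes": re.compile(r"\bshoes?\b", re.IGNORECASE)}


def match_tags(text: str, rules: dict[str, re.Pattern]) -> list[str]:
    """Return every tag whose pattern matches the text."""
    return [tag for tag, pattern in rules.items() if pattern.search(text)]


def tag_row(query: str, page: str) -> dict[str, list[str]]:
    """Tag one GSC row: category and intent come from the query, page type from the URL."""
    return {
        "category": match_tags(query, CATEGORY_RULES),
        "intent": match_tags(query, INTENT_RULES),
        "page_type": match_tags(page, PAGE_TYPE_RULES),
    }


if __name__ == "__main__":
    print(tag_row("how to clean white shoes", "https://example.com/p/shoes/white-sneaker"))
```

Returning lists rather than a single tag lets one query carry several tags at once, which matters once you have overlapping rules.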

This approach allows you to store and tag all of your Google Search Console traffic for the last 16 months, and to keep doing so every time you pull GSC data going forward. You can save the data in Google Cloud, which gives you the largest possible body of data to analyze instead of samples of your total GSC data.

This can make a big difference in how efficiently you can find patterns in the data on the day traffic tanks.

Automated tagging is particularly helpful because you retain the knowledge from previous analyses. So, if you’re an agency working with multiple clients, you don’t need to remember a weird pattern, content release issue, etc. that occurred 9 months ago.

Just tag it and save yourself a lot of time and headache.

Automated Tagging Best Practices

- Tag keyword patterns: Tag any patterns you find in your keywords. The more patterns you have, the better.

- Use regex: It’s also helpful to use regular expressions (regex) to tag instead of just “if it contains”. This can give you a lot more options.

- Tag industry-specific groups: Tag anything specific to your industry, i.e., pages and keyword groups you know are relevant, such as:

  - Brands for eCommerce

  - Taxonomy levels for eCommerce

  - Blog categories for a blog

  - Sales funnel levels (TOFU, MOFU, BOFU)

  - Cities/states for location-based businesses

- Use negative keywords if necessary: You may want to separate branded from non-branded keywords. For example, if you work on Nike’s site, you might want a category tagged as “shoes” that excludes “Nike shoes” if you care more about unbranded traffic.

- Start tagging now: Don’t wait until you have a traffic drop. The earlier you start, the more data you have.
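The regex and negative-keyword tips can be illustrated with a short Python sketch. The patterns and helper names are hypothetical, and “nike” stands in for whatever brand terms you want to exclude. It contrasts a plain “contains” check, which also fires on words like “shoehorn,” with a word-boundary regex that accepts only “shoe”/“shoes” and drops branded queries.

```python
import re

SHOES = re.compile(r"\bshoes?\b", re.IGNORECASE)
BRAND = re.compile(r"\bnike\b", re.IGNORECASE)  # hypothetical negative keyword


def contains_shoe(query: str) -> bool:
    """Naive substring check: also fires on words like "shoehorn"."""
    return "shoe" in query.lower()


def unbranded_shoes(query: str) -> bool:
    """Whole-word "shoe"/"shoes" match that excludes queries mentioning the brand."""
    return bool(SHOES.search(query)) and not BRAND.search(query)


for q in ["running shoes", "Nike shoes", "shoehorn"]:
    print(f"{q!r}: contains={contains_shoe(q)} regex={unbranded_shoes(q)}")
```

All three queries pass the “contains” check, but only “running shoes” gets the unbranded shoes tag.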

The Steps for Automated Tagging

- First, you can do an n-gram analysis of URLs and sort by traffic. You want to tag the directories and subdirectories.

What the hell does that mean?

Basically, an n-gram analysis splits the URL into its individual parts (i.e., directories and subdirectories) so you can see which parts carry the most traffic.

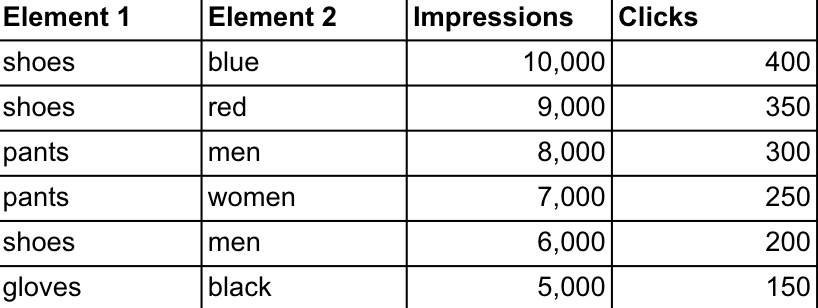

As you can see in the graph above, element 1 is /p/ and element 2 is /shoes/. In this analysis, /p/ marks product pages and /shoes/ marks pages about shoes.

So, we can tag this page type as shoe product pages. Same with pants, gloves, jackets, etc.

Where element 1 is /blog/ and element 2 is /shoes/, you guessed it, these are blog posts about shoes, so we tag those too. /s/ marks search pages, here for Nike, and you get the idea.

See! Not that scary.
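Here is a minimal Python sketch of a URL n-gram analysis, assuming you have exported (URL, clicks) rows from GSC. The function names and sample URLs are hypothetical. The code splits each path into its directories, builds contiguous 1- and 2-segment n-grams (so /p/ and /p/shoes/ are counted separately), and sums clicks per n-gram so the highest-traffic patterns surface first.

```python
from collections import Counter
from urllib.parse import urlparse


def url_ngrams(url: str, max_n: int = 2) -> list[tuple[str, ...]]:
    """Split a URL path into directories and return its contiguous n-grams."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    grams = []
    for n in range(1, max_n + 1):
        for i in range(len(segments) - n + 1):
            grams.append(tuple(segments[i : i + n]))
    return grams


def ngram_traffic(rows: list[tuple[str, int]], max_n: int = 2) -> list[tuple[tuple[str, ...], int]]:
    """Sum clicks per URL n-gram and sort by traffic, highest first."""
    totals: Counter = Counter()
    for url, clicks in rows:
        for gram in set(url_ngrams(url, max_n)):  # count each n-gram once per URL
            totals[gram] += clicks
    return totals.most_common()


rows = [
    ("https://example.com/p/shoes/red-runner", 120),
    ("https://example.com/p/shoes/blue-trainer", 80),
    ("https://example.com/p/pants/slim-chino", 50),
    ("https://example.com/blog/shoes/how-to-clean", 30),
    ("https://example.com/s/nike", 20),
]
for gram, clicks in ngram_traffic(rows)[:3]:
    print("/" + "/".join(gram) + "/", clicks)  # prints /p/ 250, /shoes/ 230, /p/shoes/ 200
```

Counting each n-gram once per URL (the `set` call) keeps a path that repeats a segment from being double-counted.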

- Then, do an n-gram analysis of keywords and sort by traffic. Here, you want to tag the categories and intent.

So, we do the same analysis for queries, tagging any queries that contain “shoe” or “shoes,” as well as colors and gender. We can do this with regex statements, so any query containing “red,” “blue,” “black,” etc. gets tagged with a color category.
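The keyword side works the same way. This Python sketch assumes (query, clicks) rows; the color and gender word lists and all function names are hypothetical. It sums clicks per query n-gram (single words by default) and applies regex-based color and gender tags.

```python
import re
from collections import Counter

# Hypothetical attribute patterns; extend the word lists for your own catalog.
COLOR = re.compile(r"\b(red|blue|black|white|green)\b", re.IGNORECASE)
GENDER = re.compile(r"\b(wo)?men'?s\b", re.IGNORECASE)


def query_ngrams(query: str, n: int) -> list[str]:
    """Return the contiguous n-word sequences in a query."""
    words = query.lower().split()
    return [" ".join(words[i : i + n]) for i in range(len(words) - n + 1)]


def keyword_ngram_traffic(rows: list[tuple[str, int]], n: int = 1) -> list[tuple[str, int]]:
    """Sum clicks per query n-gram and sort by traffic, highest first."""
    totals: Counter = Counter()
    for query, clicks in rows:
        for gram in set(query_ngrams(query, n)):
            totals[gram] += clicks
    return totals.most_common()


def attribute_tags(query: str) -> list[str]:
    """Tag a query with "color" and/or "gender" when the patterns match."""
    tags = []
    if COLOR.search(query):
        tags.append("color")
    if GENDER.search(query):
        tags.append("gender")
    return tags


rows = [("red shoes", 100), ("black shoes", 60), ("mens red jacket", 40), ("womens shoes", 30)]
print(keyword_ngram_traffic(rows)[:2])
print([attribute_tags(q) for q, _ in rows])
```

Running it with `n=2` surfaces two-word patterns such as “red shoes,” which often map more cleanly to a category than single words do.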

- Next, go through keywords with no tags, sorted by traffic, and tag their categories/intent.

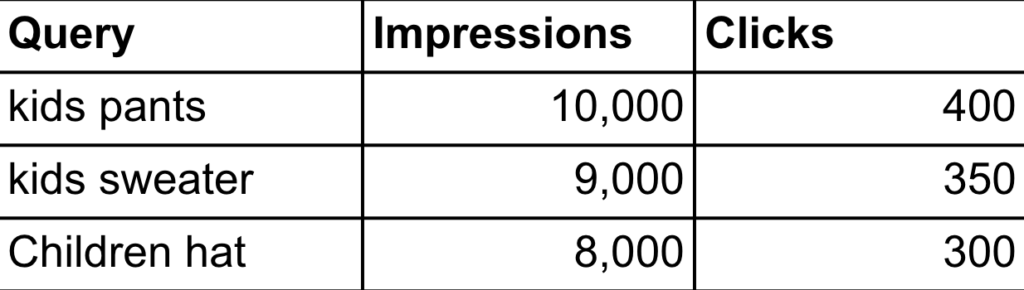

Once you get through the obvious groups, you may notice patterns like this one. Here, we can see that queries such as “kids pants” and “children hat” are uncategorized.

The best thing to do would be to create a regex statement so things like “kids”, “kid”, “children”, or “children’s” are one tag since they are synonymous.
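A minimal sketch of this step in Python, assuming (query, clicks) rows and a small hypothetical rule set. It lists the queries that no existing rule matches, sorted by clicks, and then tests a proposed synonym regex that folds “kid,” “kids,” “children,” and “children’s” into one tag.

```python
import re

# Hypothetical existing rules; a query that matches none of them is "untagged".
RULES = {
    "shoes": re.compile(r"\bshoes?\b", re.IGNORECASE),
    "color": re.compile(r"\b(red|blue|black)\b", re.IGNORECASE),
}

# Proposed synonym rule: kid, kids, children, childrens, children's -> one tag.
KIDS = re.compile(r"\b(?:kids?|children(?:['’]?s)?)\b", re.IGNORECASE)


def untagged_by_traffic(rows: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Queries that no rule matches, sorted by clicks, highest first."""
    untagged = [
        (query, clicks)
        for query, clicks in rows
        if not any(pattern.search(query) for pattern in RULES.values())
    ]
    return sorted(untagged, key=lambda row: row[1], reverse=True)


rows = [("red shoes", 100), ("children hat", 25), ("kids pants", 40), ("kid's jacket", 10)]
for query, clicks in untagged_by_traffic(rows):
    print(query, clicks, "kids tag" if KIDS.search(query) else "still untagged")
```

Once the synonym rule looks right, add it to `RULES` so those queries stop showing up in the untagged list.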

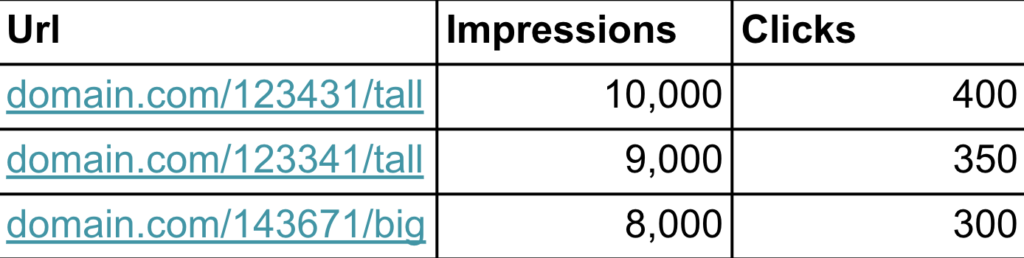

- Finally, go through pages with no tags, sorted by traffic, and tag their page types.

Because these URLs end in “tall” and “big,” we know these pages relate to size. So, we can use a regex statement to group them together as a size page type.
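Here is a sketch of the size rule in Python, assuming size URLs end in a “tall” or “big” segment. The URL shapes and the `page_type` helper are hypothetical. Requiring a `/` or `-` before the word is one way to keep a URL such as /blog/install, whose last segment merely ends in the letters “tall,” out of the size bucket.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Hypothetical rule: the last path segment is "tall"/"big" or ends in "-tall"/"-big".
SIZE_PAGE = re.compile(r"(?:^|[/-])(?:tall|big)/?$", re.IGNORECASE)


def page_type(url: str) -> Optional[str]:
    """Return "size" for size pages, or None if the rule doesn't match."""
    path = urlparse(url).path
    return "size" if SIZE_PAGE.search(path) else None


for url in [
    "https://example.com/c/jeans/tall",
    "https://example.com/c/shirts-big/",
    "https://example.com/blog/install",
]:
    print(url, page_type(url))
```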

Tools We Use for Automated Tagging

- URL Ngram Tool

- Keyword Ngram Tool

- Search Console Tagging Tool

- BigQuery

- Google Cloud

- Tableau

N-gram tools, in combination with the Search Console tagging tool, allow us to find the patterns and tag them. We’ve built our own internally, but there are free ones available.

Once we have the data, we upload it to Google Cloud and use BigQuery to access it. From there, you can use Tableau or Google Data Studio for data manipulation.

Case Study: How Automated Tagging Helped Our Client

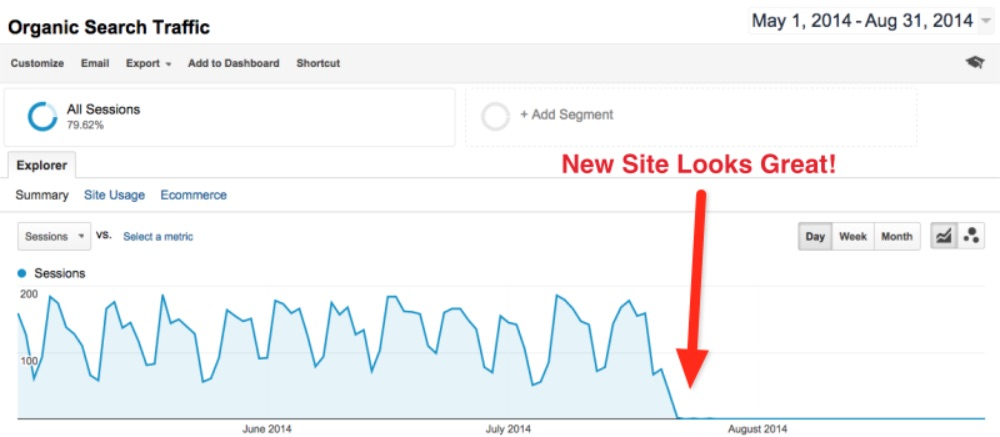

Okay, to make the benefit of tagging more concrete, let’s walk through a real case study of how this has helped us quickly figure out a traffic drop.

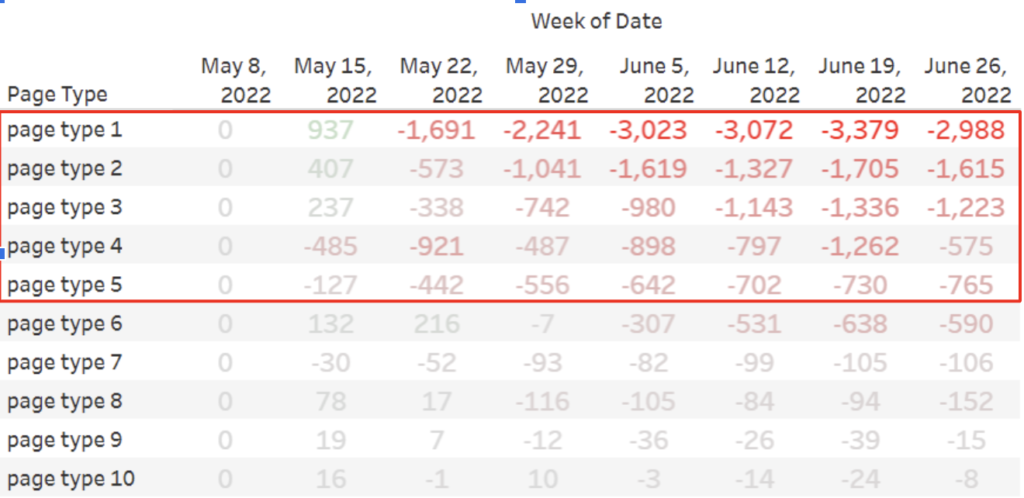

Our client was an eCommerce site hit by the May Google Core Update. We started tagging after the drop and found 5 major patterns in the ranking drops.

These tables show the change in clicks compared with the 2 weeks before the update.
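The comparison behind tables like these can be sketched in Python, assuming daily (date, tag, clicks) rows and a 14-day window on each side of the update. The window, the function name, the tag labels, the update date, and the sample numbers are all hypothetical and are not the client’s data. The code sums clicks per tag in each window and sorts by change so the biggest losses come first.

```python
from collections import defaultdict
from datetime import date, timedelta


def click_change_by_tag(
    rows: list[tuple[date, str, int]], update_day: date, window_days: int = 14
) -> list[tuple[str, int]]:
    """Clicks after minus clicks before the update, per tag, biggest loss first."""
    start = update_day - timedelta(days=window_days)
    end = update_day + timedelta(days=window_days)
    before: dict[str, int] = defaultdict(int)
    after: dict[str, int] = defaultdict(int)
    for day, tag, clicks in rows:
        if start <= day < update_day:
            before[tag] += clicks
        elif update_day <= day < end:
            after[tag] += clicks
        # Rows outside both windows are ignored.
    changes = {tag: after[tag] - before[tag] for tag in set(before) | set(after)}
    return sorted(changes.items(), key=lambda item: item[1])


update = date(2024, 1, 15)  # hypothetical update date
rows = [
    (update - timedelta(days=3), "page_type_1", 500),
    (update + timedelta(days=3), "page_type_1", 200),
    (update - timedelta(days=3), "uncategorized", 300),
    (update + timedelta(days=3), "uncategorized", 150),
    (update - timedelta(days=30), "page_type_2", 999),  # outside the window
]
print(click_change_by_tag(rows, update))
```

Treating untagged keywords as their own “uncategorized” tag is what makes that bucket show up in each table.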

Page Types (e.g., product pages, search pages, blogs)

As you can see, page type 1 was hit hardest, but page types 2-5 also dropped.

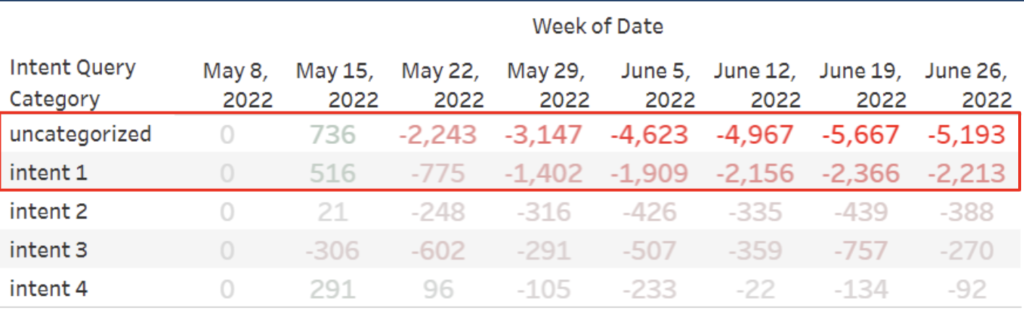

Intent Queries (e.g., purchase, questions, near me)

Intent 1 took a big dip, but so did uncategorized intent, i.e., keywords without a known intent tag. This told us we needed to look further into these uncategorized keywords to identify the drop, and it also let us exclude a ton of tagged keywords outside this bucket.

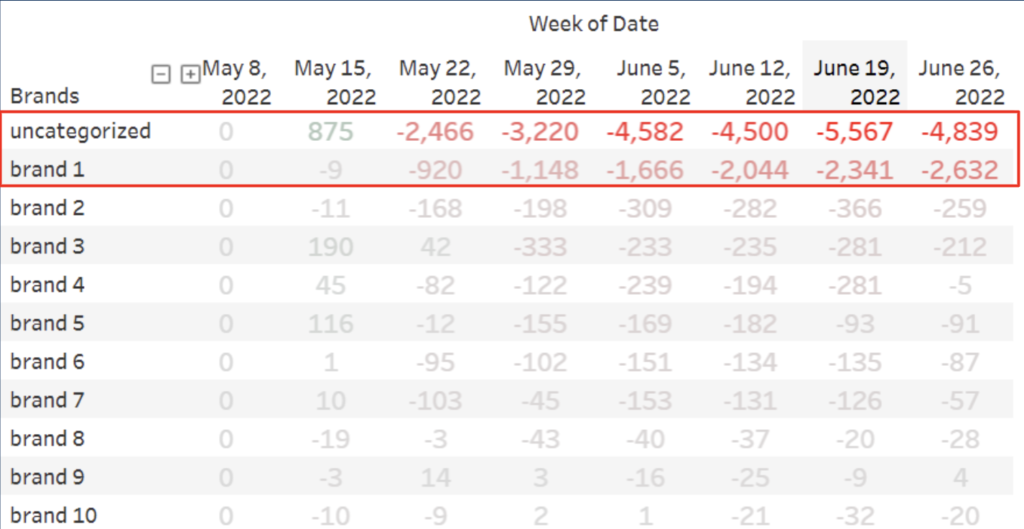

Brands (e.g., Nike, LG)

Again, we see that the uncategorized section was hit hardest, while Brand 1 was also strongly affected.

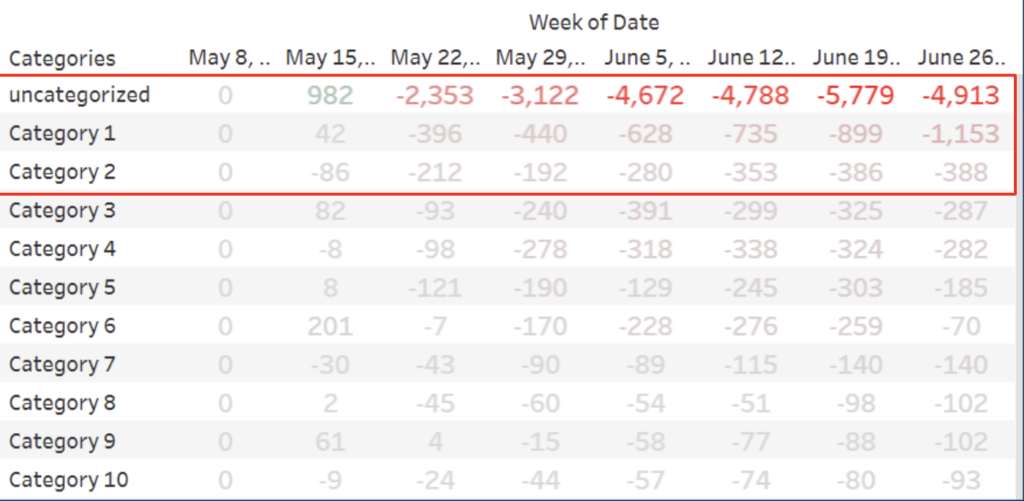

Categories (e.g., shoes, cities)

For categories, we need to look into categories 1 and 2 as well as the uncategorized portion of queries.

So far in our investigation, uncategorized keywords have been the most impacted across intent, brands, and categories, so we need to drill down on those.

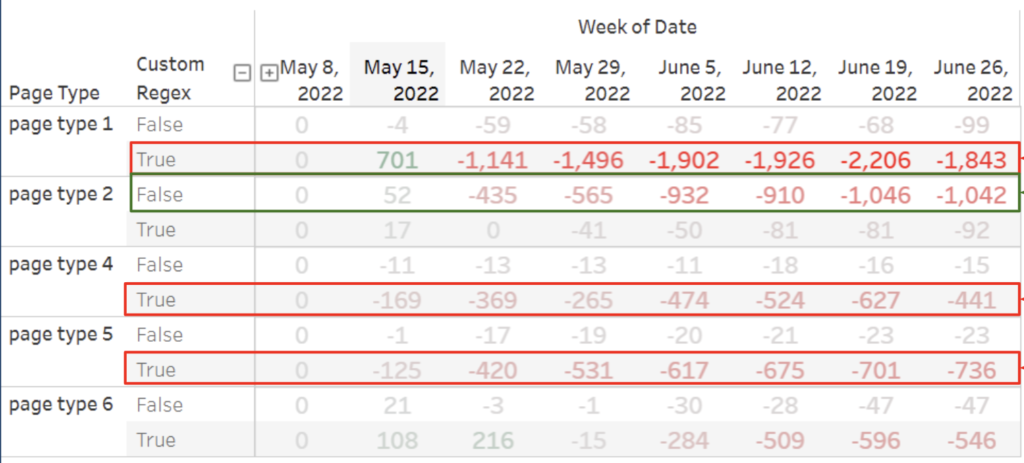

Keywords with Unknown Category

Here, we applied our page types to the keywords with unknown categories, using a custom regex statement specific to this client. For the keywords with unknown categories that dropped in ranking, page types 1, 4, and 5 represent the majority of the drop.

Keywords with Unknown Brands

We did the same thing here for unknown Brands.

We used a regex statement to find where it was true and false when we overlaid our page types.

As you can see, it’s true for page types 1, 4, and 5, while it’s false for page type 2. So for page type 2, we know we need to find keywords on that page type where the regex statement is false.

This cuts the list down from all keywords (thousands in this case) to a few dozen that we need to check manually in GSC.
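The true/false overlay can be approximated in Python with a simple cross-tab, assuming rows of (query, page type, click change) for the untagged keywords. The client’s actual regex isn’t given, so `CLIENT_PATTERN` below is a placeholder, and the rows are made up. The code sums the click change per (page type, regex result) cell, then pulls the short list of queries for one cell to check by hand in GSC.

```python
import re
from collections import defaultdict

# Placeholder for the client-specific regex, which the case study doesn't disclose.
CLIENT_PATTERN = re.compile(r"\b(?:cheap|deal|sale)\b", re.IGNORECASE)

Row = tuple[str, str, int]  # (query, page type, click change)


def crosstab(rows: list[Row], pattern: re.Pattern) -> dict[tuple[str, bool], int]:
    """Sum click change per (page type, regex matched?) cell."""
    table: dict[tuple[str, bool], int] = defaultdict(int)
    for query, page_type, change in rows:
        table[(page_type, bool(pattern.search(query)))] += change
    return dict(table)


def shortlist(rows: list[Row], pattern: re.Pattern, page_type: str, regex_matches: bool) -> list[tuple[str, int]]:
    """Queries in one cell, biggest drop first, to review by hand in GSC."""
    hits = [
        (query, change)
        for query, ptype, change in rows
        if ptype == page_type and bool(pattern.search(query)) == regex_matches
    ]
    return sorted(hits, key=lambda hit: hit[1])


rows = [
    ("cheap red shoes", "page_type_1", -120),
    ("shoe deal", "page_type_1", -80),
    ("trail runner review", "page_type_2", -60),
    ("sale boots", "page_type_2", -5),
    ("winter gloves", "page_type_2", -40),
]
print(crosstab(rows, CLIENT_PATTERN))
print(shortlist(rows, CLIENT_PATTERN, "page_type_2", regex_matches=False))
```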

Keywords with Unknown Intent

Again, looking at query intent, we can see issues with page types 1, 4, and 5, but the regex statement is false on page type 2. We can use this information to narrow down the list of keywords for page type 2, as we did for unknown brands.

Now, we have a prioritized list of keyword patterns in ranking drops and a list of action items:

- Investigate page types 1, 3, 4, and 5 where the regex statement is true

- Investigate page type 2 where the regex statement is false

- Investigate Intent 1

- Investigate Brand 1

- Investigate Category 1 and 2

Because we know how much traffic each pattern lost, we know what to send to developers first while we investigate the smaller remaining patterns.

If you want more SEO insights, you can subscribe to our LinkedIn newsletter to get hot takes, new SEO research, and a treasure trove of useful content.

The post Live Event Recap – SEO Office Hours: How to Use Automated Tagging To Diagnose Traffic Drops appeared first on Local SEO Guide.
