We’ve said it way back when, but we’ll repeat it: it keeps amazing us that there are still people using just a robots.txt file to prevent indexing of their site in Google or Bing. As a result, their site shows up in the search engines anyway. Do you know why it keeps amazing us? Because robots.txt doesn’t actually keep your site out of the search results, even though it does prevent search engines from indexing (downloading) your pages. Let me explain how this works in this post.

For more on robots.txt, please read robots.txt: the ultimate guide. Or, find the best practices for handling robots.txt in WordPress.

There is a difference between being indexed and being listed in Google

Before we explain things any further, we need to go over some terms:

- Indexed / Indexing: the process of downloading (crawling) a site’s or a page’s content to the search engine’s server, thereby adding it to its “index.”
- Ranking / Listing / Showing: showing a site in the search result pages (aka SERPs).

So, while the most common process goes from indexing to listing, a site doesn’t have to be indexed to be listed. If a link points to a page or domain, Google follows that link. Even if the robots.txt on that domain prevents the search engine from indexing that page, Google can still show the URL in its results if it can gather from other signals that the page might be worth looking at.

In the old days, those signals could have come from DMOZ or the Yahoo directory. These days, I can imagine Google using, for instance, your Google My Business details, or the old data from those projects. Plenty of other sites summarize your website, too.

Now, if the explanation above doesn’t make sense, have a look at this video explanation by ex-Googler Matt Cutts from 2009:

If you have reasons to keep your website out of the search results, adding that request to each specific page you want to block, as Matt describes, is still the right way to go.

But Google has to be able to see that meta robots tag, and if robots.txt blocks a page, Google never downloads it and so never sees the tag. So, if you want to hide pages from the search engines effectively, you need to let them index those pages, even though that might seem contradictory. There are two ways of telling them not to list those pages.
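Before we get to those two ways, the catch-22 above can be written down as a deliberately simplified model of a well-behaved crawler. This is a sketch, not how Google works internally: the function `crawl_decision` and its return strings are hypothetical, the `example.com` URL is made up, and the noindex check is a naive substring test. It only uses Python's standard `urllib.robotparser` to show the ordering: robots.txt is consulted before the page is downloaded, so a blocked page's meta robots tag is never read.

```python
from urllib.robotparser import RobotFileParser


def crawl_decision(url: str, robots_txt: str, page_html: str) -> str:
    """Toy model (hypothetical): what a polite crawler can learn about a linked URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch("*", url):
        # Blocked by robots.txt: the HTML, including any noindex tag in it,
        # is never downloaded, so only the bare URL (known from links) remains.
        return "not downloaded; URL may still be listed"
    # Allowed: the crawler downloads the page and can read its meta robots tag.
    if "noindex" in page_html.lower():  # naive check, for illustration only
        return "downloaded; noindex seen, kept out of results"
    return "downloaded; may be listed normally"


PAGE = '<html><head><meta name="robots" content="noindex,nofollow"></head></html>'
URL = "https://example.com/page"

print(crawl_decision(URL, "User-agent: *\nDisallow: /", PAGE))
# not downloaded; URL may still be listed
print(crawl_decision(URL, "User-agent: *\nDisallow:", PAGE))
# downloaded; noindex seen, kept out of results
```

An empty `Disallow:` line means "allow everything", which is why the second call gets to read the noindex tag while the first never does.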

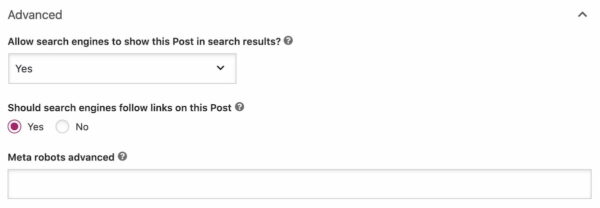

Prevent listing of your page by adding a meta robots tag

The first option to prevent the listing of your page is to use a robots meta tag. We’ve got a more extensive ultimate guide on robots meta tags, but it basically comes down to adding this tag to the <head> section of your page:

<meta name="robots" content="noindex,nofollow">
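If you want to double-check that a page actually carries this tag, a small script can look for it. The sketch below uses Python's standard `html.parser` module; the class `RobotsMetaFinder` and the helper `has_noindex` are hypothetical names, and the check only looks at `name="robots"`, not crawler-specific names such as `googlebot`.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            # "noindex,nofollow" -> ["noindex", "nofollow"]
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html: str) -> bool:
    """Return True if any robots meta tag in the HTML contains noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex,nofollow"></head><body></body></html>'
print(has_noindex(page))  # True
```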

If you use Yoast SEO, this is super easy! No need to add the code yourself. Learn how to add a noindex tag with Yoast SEO here.

The issue with a tag like that, though, is that you have to add it to each and every page.

Or by adding an X-Robots-Tag HTTP header

To make adding the meta robots tag to every single page of your site a bit easier, the search engines came up with the X-Robots-Tag HTTP header. It lets you send an HTTP header called X-Robots-Tag and set its value just as you would the meta robots tag’s value. The cool thing about this is that you can do it for an entire site at once. If your site runs on Apache and mod_headers is enabled (it usually is), you could add the following single line to your .htaccess file:

Header set X-Robots-Tag "noindex, nofollow"

This would have the effect that the entire site can be indexed, but would never be shown in the search results.
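The .htaccess line above is Apache-specific. If your site is served by a Python application instead, the same idea can be sketched as WSGI middleware that adds the header to every response. This is an illustrative assumption, not something the search engines prescribe: the names `add_x_robots_tag` and `hello_app` are hypothetical, and most frameworks and web servers have their own way of setting response headers. Like Apache's `Header set`, it replaces any existing header of the same name rather than adding a second one.

```python
from wsgiref.util import setup_testing_defaults


def add_x_robots_tag(app, value="noindex, nofollow"):
    """Wrap a WSGI app so every response carries an X-Robots-Tag header."""
    def wrapped(environ, start_response):
        def start_response_with_header(status, headers, exc_info=None):
            # Drop any existing X-Robots-Tag so the site-wide value wins.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", value))
            return start_response(status, headers, exc_info)
        return app(environ, start_response_with_header)
    return wrapped


def hello_app(environ, start_response):
    """Hypothetical stand-in for your real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


app = add_x_robots_tag(hello_app)

# Call the wrapped app directly with a fake request to inspect the headers.
captured = {}


def fake_start_response(status, headers, exc_info=None):
    captured["status"] = status
    captured["headers"] = headers


environ = {}
setup_testing_defaults(environ)
body = b"".join(app(environ, fake_start_response))
print(captured["headers"])
# [('Content-Type', 'text/plain'), ('X-Robots-Tag', 'noindex, nofollow')]
```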

So, get rid of that robots.txt file with Disallow: / in it. Use the X-Robots-Tag or that meta robots tag instead!

Read more: The ultimate guide to the meta robots tag »

The post Preventing your site from being indexed, the right way appeared first on Yoast.
