Anyone who’s ever been a teenager is likely familiar with the question, “Why aren’t you doing something productive?” If only I had known, as an angsty 15-year-old, what I know after conducting the research for this article. If only I could have responded to my parents with the brilliant retort, “You know, the idea of productivity actually dates back to before the 1800s.” If only I could have asked, “Do you mean ‘productive’ in an economic or modern context?”

Back then, I would have been sent to my room for “acting smart.” But today, I'm a nerdy adult who is curious to know where today's widespread fascination with productivity comes from. There are endless tools and apps that help us get more done — but where did they begin?

If you ask me, productivity has become a booming business. And it's not just my not-so-humble opinion — numbers and history support it. Let's step back in time, and find out how we got here, and how getting stuff done became an industry.

What Is Productivity?

The Economic Context

Dictionary.com defines productivity as “the quality, state, or fact of being able to generate, create, enhance, or bring forth goods and services.” In an economic context, the meaning is similar — it’s essentially a measure of the output of goods and services available for monetary exchange.

How we tend to view productivity today is a bit different. While it remains a measure of getting stuff done, it seems like it’s gone a bit off the rails. It’s not just a measure of output anymore — it’s the idea of squeezing every bit of output that we can from a single day. It’s about getting more done in shrinking amounts of time.

It’s a fundamental concept that seems to exist at every level, including a federal one — the Brookings Institution reports that even the U.S. government, for its part, “is doing more with less” by trying to implement more programs with a decreasing number of experts on the payroll.

The Modern Context

And it’s not just the government. Many employers — and employees — are trying to emulate this approach. For example, CBRE Americas CEO Jim Wilson told Forbes, “Our clients are focused on doing more and producing more with less. Everybody's focused on what they can do to boost productivity within the context of the workplace.”

It makes sense that someone would view that widespread perspective as an opportunity. There was an unmet need for tools and resources that would solve the omnipresent never-enough-hours-in-the-day problem. And so it was monetized to the point where, today, we have things like $25 notebooks — the Bullet Journal, to be precise — and countless apps that promise to help us accomplish something at any time of day.

But how did we get here? How did the idea of getting stuff done become an industry?

A Brief History of Productivity

Pre-1800s

Productivity and Agriculture

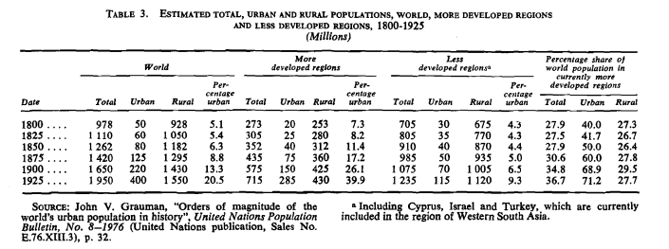

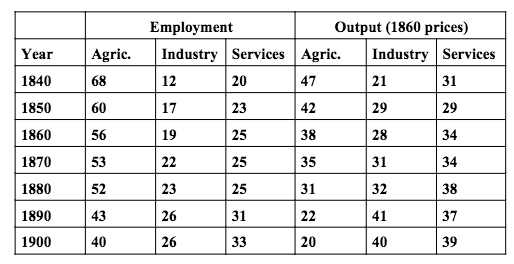

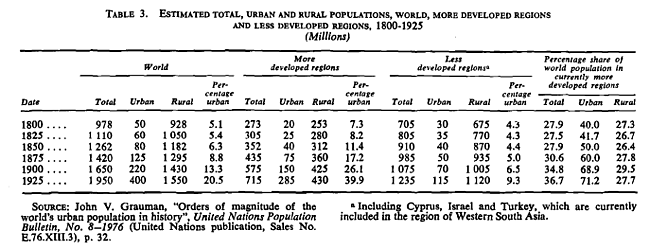

In his article “The Wealth Of Nations Part 2 — The History Of Productivity,” investment strategist Bill Greiner does an excellent job of examining this concept on a purely economic level. In its earliest days, productivity was largely limited to agriculture — that is, the production and consumption of food. Throughout the world around that time, rural populations vastly outnumbered those in urban areas, suggesting that fewer people were dedicated to non-agricultural industry.

Source: United Nations Department of International Economic and Social Affairs

On top of that, prior to the 1800s, food preservation was primitive at best. After all, refrigeration wasn’t really available until 1834, which meant that crops had to be consumed fast, before they spoiled. There was little room for surplus, and the focus was mainly on survival. With so little beyond subsistence to work toward, the idea of “getting stuff done,” and with it the idea of productivity, didn’t really exist yet.

The Birth of the To-Do List

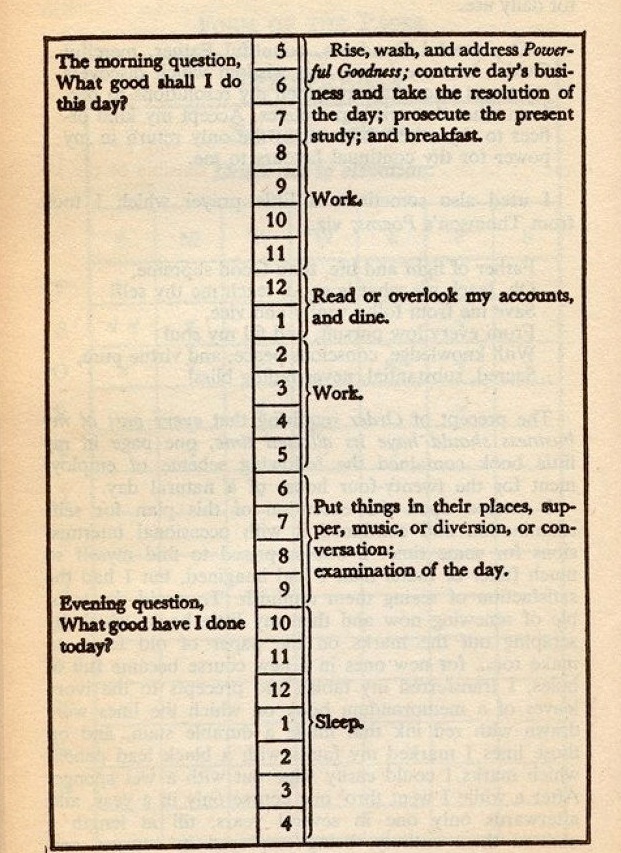

It was shortly before the 19th century that to-do lists began to surface, as well. Benjamin Franklin’s autobiography, first published in 1791, recorded one of the earliest-known forms of it, mostly with the intention of contributing something of value to society each day. The list opened with the question, “What good shall I do this day?”

Source: Daily Dot

The items on Franklin’s list seemed to indicate a shift in focus from survival to completing daily tasks — things like “dine,” “overlook my accounts,” and “work.” It was almost a precursor to the U.S. Industrial Revolution, which is estimated to have begun within the first two decades of the nineteenth century. The New York Stock & Exchange Board was officially established in 1817, for example, signaling big changes to the idea of trade — society was drifting away from the singular goal of survival, to broader aspirations of monetization, convenience, and scale.

1790 – 1914

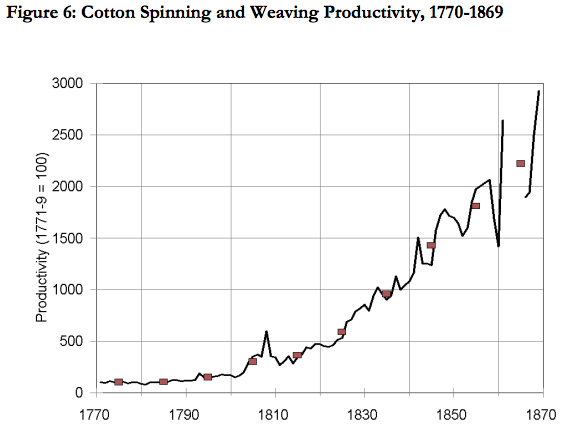

The Industrial Revolution actually began in Great Britain in the mid-1700s, and started to show signs of existence in the U.S. in 1794, with the patenting of the cotton gin, a machine that mechanically removed the seeds from cotton fiber. It increased the rate of production so much that cotton eventually became a leading U.S. export and “vastly increased the wealth of this country,” writes Joseph Wickham Roe.

Source: Gregory Clark

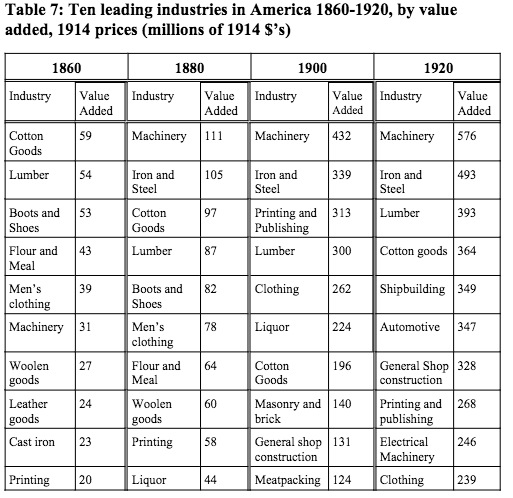

It was one of the first moves in a societal shift toward automation, which required less human labor; manual work often slowed down production and resulted in smaller output. Notice in the table below that, beginning in 1880, machinery added the greatest value to the U.S. economy. So from the invention of the cotton gin to the 1913 unveiling of Ford’s inaugural assembly line (note that “automotive” was added to the table below in 1920), there was a common goal among the many advances of the Industrial Revolution: to produce more in (you guessed it) less time.

Source: Joel Mokyr

1914 – 1970s

Pre-War Production

Source: Joel Mokyr

Advances in technology — and the resulting higher rate of production — meant more employment was becoming available in industrial sectors, reducing the agricultural workforce. But people may have also become busier, leading to the invention and sale of consumable scheduling tools, like paper day planners.

According to the Boston Globe, the rising popularity of daily diaries coincided with industrial progression, with one of the earliest known daily planners available for purchase, the Wanamaker Diary, debuting in the 1900s. Created by department store owner John Wanamaker, the planner had pages interspersed with print ads for the store’s catalogue, achieving two newly commercial goals: helping an increasingly busy population plan its days, and advertising the goods that would help to make life easier.


Source: Boston Globe

World War I

But productivity was disrupted when the U.S. entered World War I in April 1917, fighting until the war’s end in November 1918. Between 1918 and at least 1920, both industrial production and the labor force shrank, setting the tone for several years of economic instability. The stock market grew quickly after the war, only to crash in 1929 and lead to the 10-year Great Depression. Suddenly, the focus was on survival again, especially with the U.S. entrance into World War II in 1941.

Source: William D. O'Neil

But look closely at the above chart. After 1939, the U.S. GDP actually grew. That’s because there was a revitalized need for production, mostly of war materials. On top of that, the World War II era saw the introduction of women into the workforce in large numbers — in some nations, women comprised 80% of the total addition to the workforce during the war.

World War II and the Evolving Workforce

The growing presence of women in the workforce had major implications for the way productivity is thought of today. Starting no later than 1948 — three years after World War II’s end — the number of women in the workforce only continued to grow, according to the U.S. Department of Labor.

That suggests larger numbers of women were stepping away from full-time domestic roles, but many still had certain demands at home. By 1975, for example, nearly half of mothers with children under 18 were in the workforce. That created a newly unmet need for convenience: a way to fulfill these demands at work and at home.

Once again, a growing percentage of the population was strapped for time, but had increasing responsibilities. That created an opportunity for certain industries to present new solutions to a nearly 200-year-old problem that had been reframed for a modern context. And it began with food production.

1970s – 1990s

The 1970s and the Food Industry

With more people — men and women — spending less time at home, there was a greater need for convenience. More time was spent commuting and working, and less time was spent preparing meals, for example.

The food industry, therefore, was one of the first to respond. It recognized that the time available to everyone for certain household chores was beginning to diminish, and began to offer solutions that helped people (say it with us) accomplish more in fewer hours.

Those solutions actually began with packaged foods like cake mixes and canned goods that dated back to the 1950s, when TV dinners also hit the market — 17 years later, microwave ovens became available for about $500 each.

But the 1970s saw an uptick in fast food consumption, with Americans spending roughly $6 billion on it at the start of the decade. As Eric Schlosser writes in Fast Food Nation, “A nation’s diet can be more revealing than its art or literature.” This growing availability and consumption of prepared food revealed that we were becoming obsessed with maximizing our time — and with, in a word, productivity.

The Growth of Time-Saving Technology

Technology became a bigger part of the picture, too. With the invention of the personal computer in the 1970s and the World Wide Web in the 1980s, productivity solutions were becoming more digital. Microsoft, founded in 1975, was one of the first to offer them, with a suite of programs released in the late 1990s to help people stay organized, and integrate their to-do lists with an increasingly online presence.

Source: Wayback Machine

It was preceded by a 1992 version of a smartphone called Simon, which included portable scheduling features. That introduced the idea of being able to remotely book meetings and manage a calendar, saving time that would have been spent on such tasks after returning to one’s desk. It paved the way for calendar-ready PDAs, or personal digital assistants, which became available in the late 1990s.

By then, the idea of productivity was no longer on the brink of becoming an industry — it was an industry. It would simply become a bigger one in the decades to follow.

The Early 2000s

The Modern To-Do List

Once digital productivity tools became available in the 1990s, the release of new and improved technologies came at a remarkable rate — especially when compared to the pace of developments in preceding centuries.

In addition to Microsoft, Google is credited with becoming a leader in this space. By the end of 2000, it had won two Webby Awards and was cited by PC Magazine for its “uncanny knack for returning extremely relevant results.” It was yet another form of time-saving technology, helping people find the information they were seeking in a way that was more seamless than, say, using a library card catalog.

In April 2006, Google Calendar was unveiled, becoming one of the first technologies that allowed users to share their schedules with others, helping to mitigate the time-consuming exchanges often required to set up meetings. Google followed it that summer with Google Apps for Your Domain, providing businesses with an all-in-one solution that included email, voicemail, calendars, and web development tools, among others.

Source: Wayback Machine

During the first 10 years of the century, Apple was experiencing a brand revitalization. The first iPod was released in 2001, followed by the MacBook Pro in 2006 and the iPhone, announced in January 2007. All three would have huge implications for the widespread idea of productivity.

2008 – 2014

Search Engines That Talk — and Listen

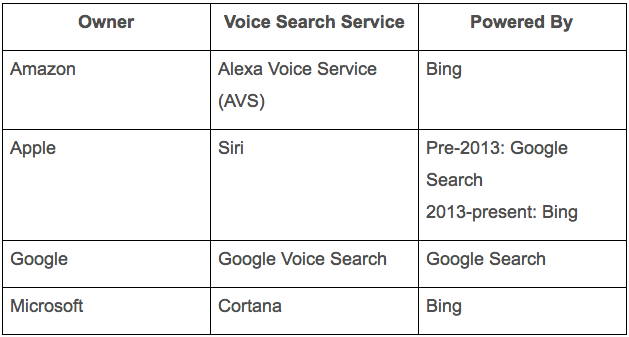

When the iPhone 4S was released in 2011, it came equipped with Siri, “an intelligent assistant that helps you get things done just by asking.” Google had already implemented voice search technology in 2008, but it didn’t garner quite as much public attention — most likely because it required a separate app download. Siri, conversely, was already installed in the Apple mobile hardware, and users only had to push the iPhone’s home button and ask a question conversationally.

But both offered further time-saving solutions. To hear weather and sports scores, for example, users no longer had to open a separate app, wait for a televised report, or type in searches. All they had to do was ask.

By 2014, voice search had become commonplace, with multiple brands, including Microsoft and Amazon, offering their own technologies.

The Latest Generation of Personal Digital Assistants

With the 2014 debut of Amazon Echo, voice activation wasn’t just about searching anymore. It was about full-blown artificial intelligence that could integrate with our day-to-day lives. It was starting to converge with the Internet of Things — the technology that allowed things in the home, for example, to be controlled digitally and remotely — and continued to replace manual, human steps with intelligent machine operation. We were busier than ever, with some reporting 18-hour workdays and, therefore, diminishing time to get anything done outside of our employment.

Here was the latest solution, at least for those who could afford the technology. Users didn’t have to manually look things up, turn on the news, or write down to-do and shopping lists. They could ask a machine to do it with a command as simple as, “Alexa, order more dog food.”

Competition would eventually enter the picture, and Amazon would no longer stand alone in the personal assistant technology space. It made sense that Google, which had long since established itself as a leader in the productivity industry, would enter the market with Google Home, which was released in 2016 and offered much of the same convenience as the Echo.

Of course, neither one has the same exact capabilities as the other — yet. But let’s pause here, and reflect on how far we’ve come.

2015 to 2020

Smart Devices are Everywhere

The Amazon Echo was just the beginning of smart devices that could help us plan out our day. We now have smart thermostats that schedule our heating and cooling, refrigerators that notify us when we're low on food, TVs with every streaming service we need, and a handful of other appliances that schedule themselves around our lifestyle.

While some might worry that smart devices could limit our level of motivation and productivity, others might disagree. Smart devices often free us from mundane tasks, leaving us more time to focus on the work that matters most.

Big Data Powers Business Productivity

With technology like artificial intelligence, automation, analytics tools, and contact management systems, we are now able to gather more data about our audiences and customers quickly, with just a few clicks. This data has allowed marketers, as well as strategists in other departments, to build tactics that engage audiences, please customers, generate revenue, and even offer major ROI.

Want to see an example? Here's a great case study on how one successful agency used AI and analytics software to gather, report, and strategize around valuable client data.

Offices Rely on Productivity Tools

We've come a long way from Google Calendar. Each day, you might use a messaging system like Slack, a video software like Zoom, or task-management tools like Trello, Asana, or Jira to keep your work on track.

Aside from keeping employees on task, these tools have been especially important for keeping teams connected and on the same page. As modern workplaces increasingly embrace remote and international teammates, they're also investing in digital task management and productivity tools that can keep everyone in the loop.

Looking to boost your digital tool stack? Check out this list of productivity tools, especially if you're working remotely.

Where Productivity Is Now — and Where It's Going

We started this journey in the 1700s with Benjamin Franklin’s to-do list. Now, here we are, over two centuries later, with intelligent machines making those lists and managing our lives for us.

Have a look at the share prices of some leaders in this space (as of the writing of this post, in USD):

- Amazon: $3,051.88

- Apple: $384.76

- Google: $1,538.37

- Microsoft: $203.90

Over time — hundreds of years, in fact — technology has made things more convenient for us. But as the above list shows, it’s also earned a lot of money for a lot of people. And those figures leave little doubt that, today, productivity is an industry, and a booming one at that.

Editor's Note: This blog post was originally published in January 2017, but was updated in July 2020 for comprehensiveness and freshness.
